Now we take a quick look at all image sizes to decide on the final aspect ratio:

    identify png/*

    # Hardlink the ones that are already PNG.
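When there are many images, the raw `identify` listing can be hard to scan. A minimal sketch, assuming ImageMagick is installed and the images live in `png/` as above, that counts how many images share each size; the helper name `size_summary` is made up for this illustration:

```shell
#!/bin/sh
# Hypothetical helper: reads one "WIDTHxHEIGHT" string per line on stdin
# and prints each distinct size with its count, most common first.
size_summary() {
  sort | uniq -c | sort -rn
}

# Only touch real files when ImageMagick and the png/ directory exist.
if command -v identify >/dev/null 2>&1 && [ -d png ]; then
  # %w and %h are the image width and height in pixels.
  identify -format '%wx%h\n' png/* | size_summary
fi
```

The most frequent size at the top of the list is usually a sensible candidate for the final aspect ratio, with the outliers being the images to crop.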

There's a bit more to creating slideshows than running a single ffmpeg command, so here is a more detailed example inspired by this timeline.

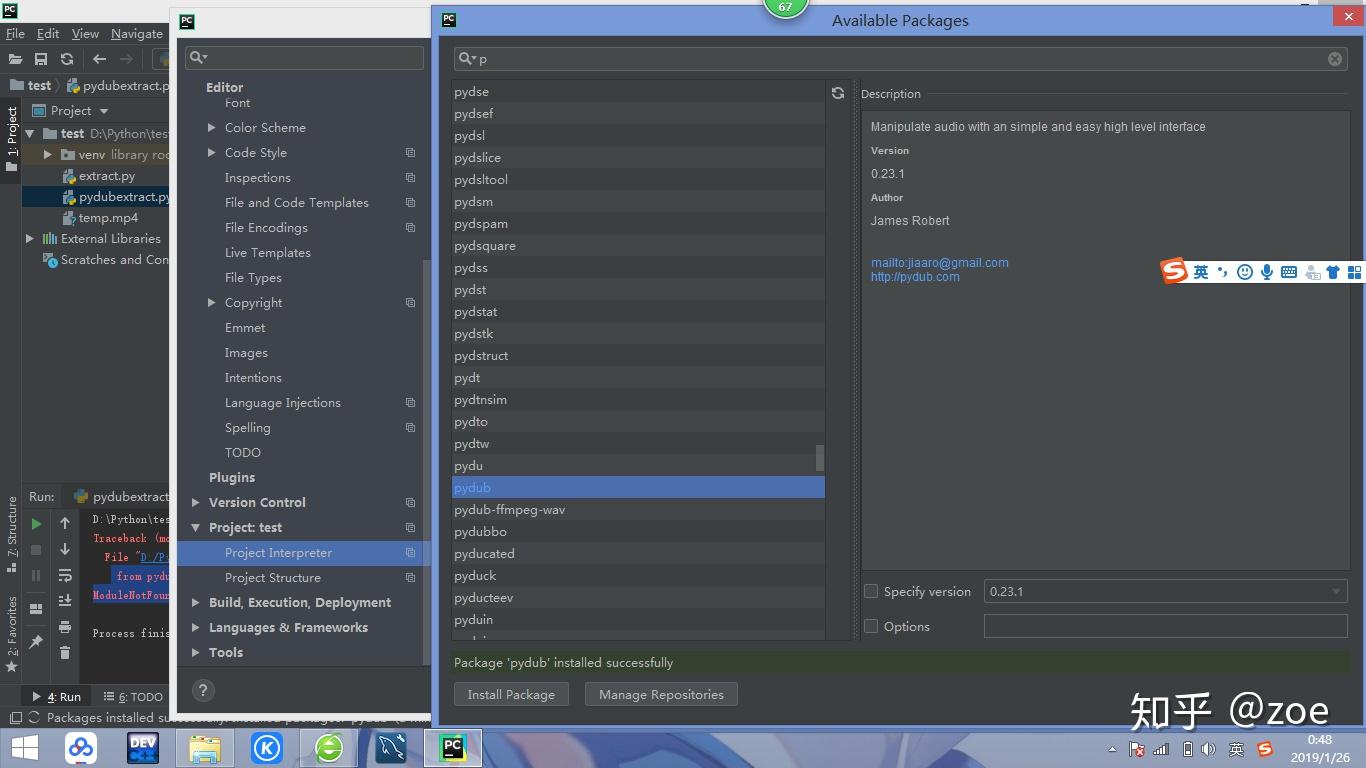

In particular, the width has to be divisible by 2, otherwise conversion fails with: "width not divisible by 2".

Full realistic slideshow case study setup step by step

I would also first ensure that all images to be used have the same aspect ratio, possibly by cropping them with imagemagick or nomacs beforehand, so that ffmpeg will not have to make hard decisions.

Your images should of course be sorted alphabetically, typically as: 0001-first-thing.jpg

These are the test media I've used: wget -O opengl-rotating-triangle.zip

Images generated with: How to use GLUT/OpenGL to render to a file?

Slideshow video with one image per second:

    ffmpeg -framerate 1 -pattern_type glob -i '*.png' \
      -c:v libx264 -r 30 -pix_fmt yuv420p out.mp4

Add some music to it, cutting off the presumably longer audio when the images end:

    ffmpeg -framerate 1 -pattern_type glob -i '*.png' -i audio.ogg \
      -c:a copy -shortest -c:v libx264 -r 30 -pix_fmt yuv420p out.mp4

Be a hippie and use the patent-unencumbered Theora video format in an OGG container:

    ffmpeg -framerate 1 -pattern_type glob -i '*.png' -i audio.ogg \
      -c:a copy -shortest -c:v libtheora -r 30 -pix_fmt yuv420p out.ogv

It is cool to observe how the video compresses the image sequence much better than ZIP, as it is able to compress across frames with specialized algorithms.

Convert one music file to a video with a fixed image for YouTube upload
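For the "width not divisible by 2" failure, one possible workaround is to let ffmpeg crop odd dimensions down to the nearest even number. The sketch below assumes that losing at most one pixel per axis is acceptable. The function names `even` and `make_even_slideshow` and the output name `out-even.mp4` are made up for this illustration.

```shell
#!/bin/sh
# Round a pixel dimension down to the nearest even number. This mirrors
# what trunc(x/2)*2 computes inside the ffmpeg crop filter below.
even() {
  echo $(( $1 / 2 * 2 ))
}

# Hypothetical wrapper: one image per second, with each frame cropped to
# even width and height so that libx264 with yuv420p accepts it.
make_even_slideshow() {
  ffmpeg -framerate 1 -pattern_type glob -i '*.png' \
    -vf 'crop=trunc(iw/2)*2:trunc(ih/2)*2' \
    -c:v libx264 -r 30 -pix_fmt yuv420p out-even.mp4
}

even 1921   # prints 1920
even 1080   # prints 1080
```

Calling `make_even_slideshow` in the directory that holds the images runs the encode. Cropping was picked here only because it keeps the pixels unscaled; padding or scaling to an even size would be alternatives with different trade-offs.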

Normal speed video with one image per frame at 30 FPS:

    ffmpeg -framerate 30 -pattern_type glob -i '*.png' \
      -c:v libx264 -pix_fmt yuv420p out.mp4

Add some audio to it:

    ffmpeg -framerate 30 -pattern_type glob -i '*.png' \
      -i audio.ogg -c:a copy -shortest -c:v libx264 -pix_fmt yuv420p out.mp4

The great -pattern_type glob option makes it easier to select the images in many cases.
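Since the commands above select frames with a `'*.png'` glob, the file names alone determine the frame order. Assuming the glob expands in alphabetical order, a small sketch of why zero-padded numeric prefixes matter; `pad_name` is a hypothetical helper and the file names are invented examples:

```shell
#!/bin/sh
# Hypothetical helper: build a zero-padded name from a frame number and a
# label. Pass the number as plain decimal, without leading zeros.
pad_name() {
  printf '%04d-%s\n' "$1" "$2"
}

# Without padding, alphabetical order puts 10 before 2.
printf '%s\n' 10-end.png 2-middle.png 1-start.png | LC_ALL=C sort

# With padding, alphabetical order matches numeric order.
printf '%s\n' \
  "$(pad_name 10 end.png)" \
  "$(pad_name 2 middle.png)" \
  "$(pad_name 1 start.png)" | LC_ALL=C sort
```

The first listing shows `10-end.png` ahead of `2-middle.png`, which would put the last slide in the middle of the video; the padded names sort in the intended order.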
